The GTC AI conference is over, but registrants still have exclusive access to on-demand sessions through April 8.

Brilliant Minds. Breakthrough Discoveries.

Explore the 2024 GTC AI conference.

Thank You for an Amazing GTC

Live From GTC Featured Episodes

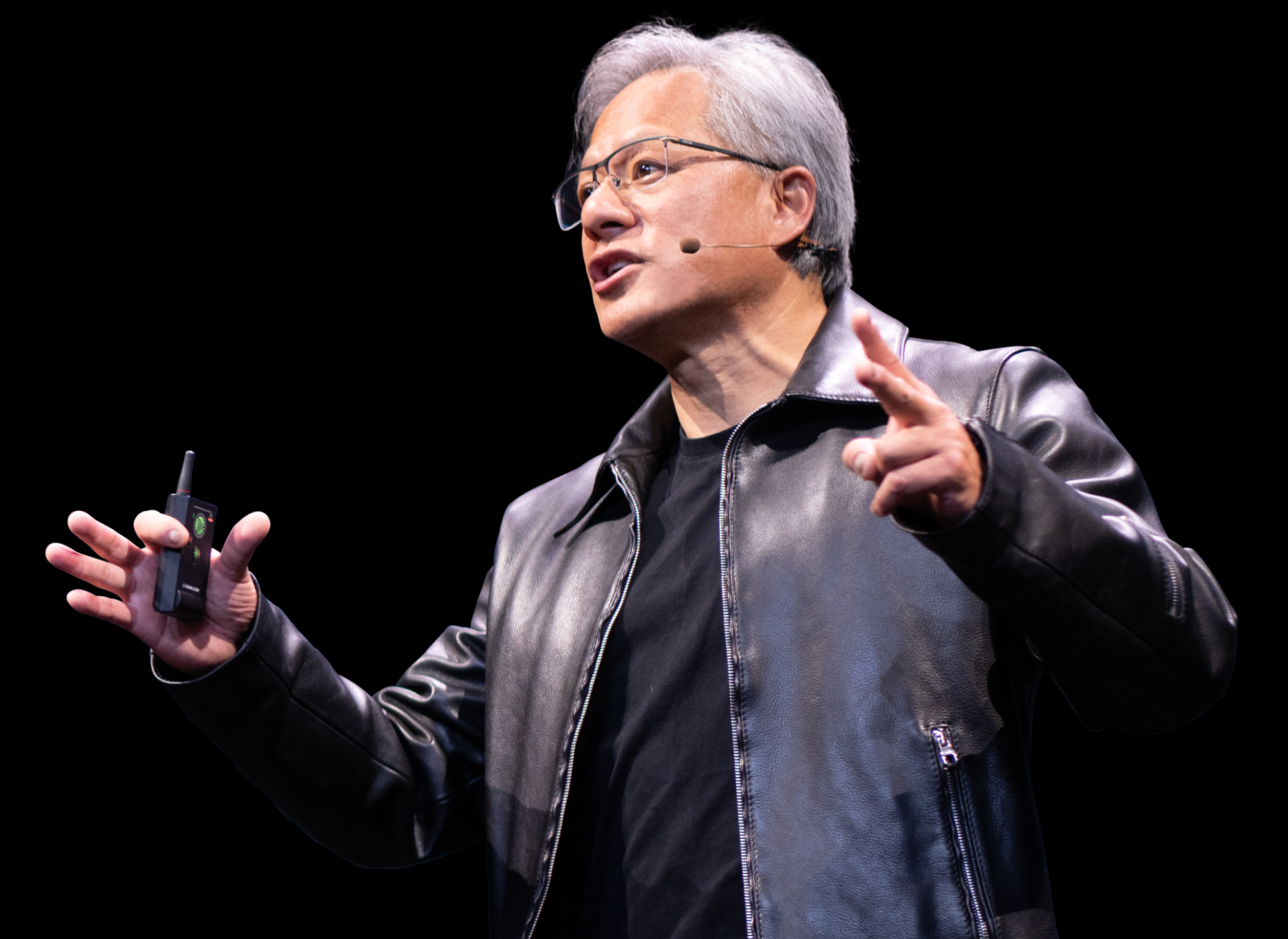

Jensen Huang | Founder and CEO | NVIDIA

Don’t Miss This Transformative Moment in AI

Watch Jensen Huang’s keynote as he shares AI advances that are shaping our future.

Check Out Sessions Chosen Just for You

Check Out These Groundbreaking Panels

Hear Big Ideas From Global Thought Leaders

Learn, Connect, and Be Inspired

- NVIDIA Deep Learning Institute (DLI)

- Developers

- Startups

See What Attendees Say About GTC

Stay on top of technology advancements and discover expert insights—on your schedule.